“You will eat less than you desire and more than you deserve.” will be referred to as v1 and “You can come back.” will be referred to as v2.

Sadly I have forgotten a lot of details concerning v1 because so much has been done outside of After Effects and I didn’t bother to have a tidy workspace in the project. A quick outline on how these videos came to be, starting with v1:

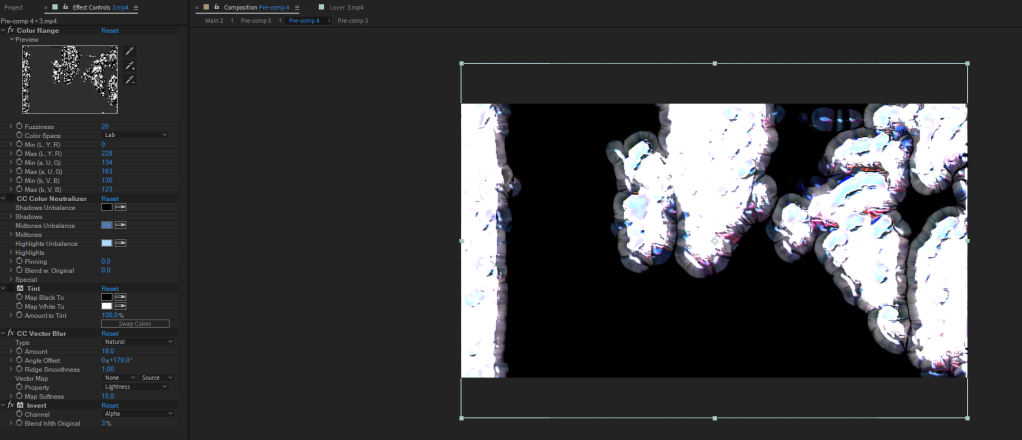

The basis for both videos is Ghost in the Shell: Innocence. The anime has been processed multiple times by the neural network that KoKuToru created (Pixelator). He made quite a few different models for it, so the look can be very different. At the very start I ran it through the NN multiple times while adding effects like Video Gogh and Twixtor in Adobe After Effects. Furthermore I used the results of different models and overlaid them and/or made them alternate via opacity expressions. Turbulent Noise, Invert, Color Range and CC Vector Blur have also been utilized at one point, probably.
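The actual opacity expressions are lost along with the rest of the tidy project, so what follows is only a minimal sketch of how stacked layers could take turns being visible. The function name `alternatingOpacity` and the `holdSeconds` parameter are made up for illustration. It is plain JavaScript; inside After Effects the same arithmetic would presumably read the built-in `time` value instead of taking it as an argument.

```javascript
// Hypothetical helper: the opacity (0 or 100) one layer gets at a given time
// when `layerCount` stacked layers take turns being visible.
function alternatingOpacity(time, layerIndex, layerCount, holdSeconds) {
  const slot = Math.floor(time / holdSeconds); // how many holds have elapsed so far
  const activeLayer = slot % layerCount;       // whose turn it is right now
  return activeLayer === layerIndex ? 100 : 0;
}

// Two model outputs swapping every half second (values are assumptions).
for (const t of [0, 0.6, 1.0]) {
  console.log(t, alternatingOpacity(t, 0, 2, 0.5), alternatingOpacity(t, 1, 2, 0.5));
}
```

Hard switching between 0 and 100 gives a flicker between models; anything smoother would need a blend instead of the equality check.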

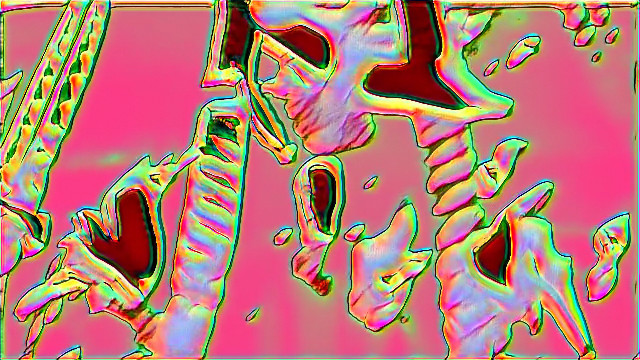

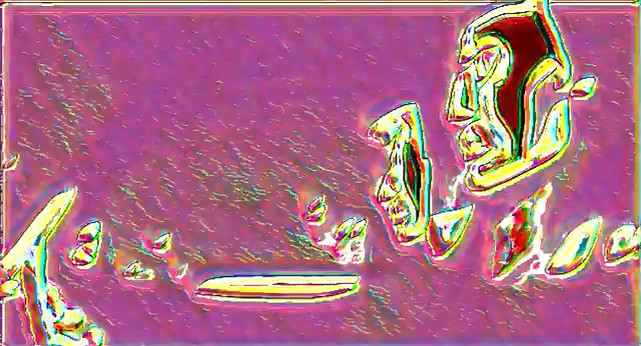

I’m using “probably” because the project file is so fucked up I don’t remember what I used and what I didn’t use. For example, one scrapped version (that came after v1 was done) was made with footage created in Deforum AI as well as RSMB, Displacement Map, Glow and Noise. After firing it through the NN:

Using a song by Merzbow just felt fitting for this footage. I don’t remember at what point I decided on it, but it was relatively early on. After deciding on the song, I wanted the video to express the constant uneasiness of the noise. In the end, it turned out to visualize the noise pretty well without the use of actual noise, or rather without it being dominantly visible. Someone described it as “sperm cells on the carpet dying”. I wonder who that was.

At that point v1 was done. The Mixed Reality contest at amvnews.ru is ongoing and I planned to send it there. But I mixed things up and sent it to the Anime Weekend Atlanta Accolades Contest, which I thought would be at the end of September but is in fact at the end of October. Both contests are exclusive, so I created a v2, just because I like the idea of the Mixed Reality contest.

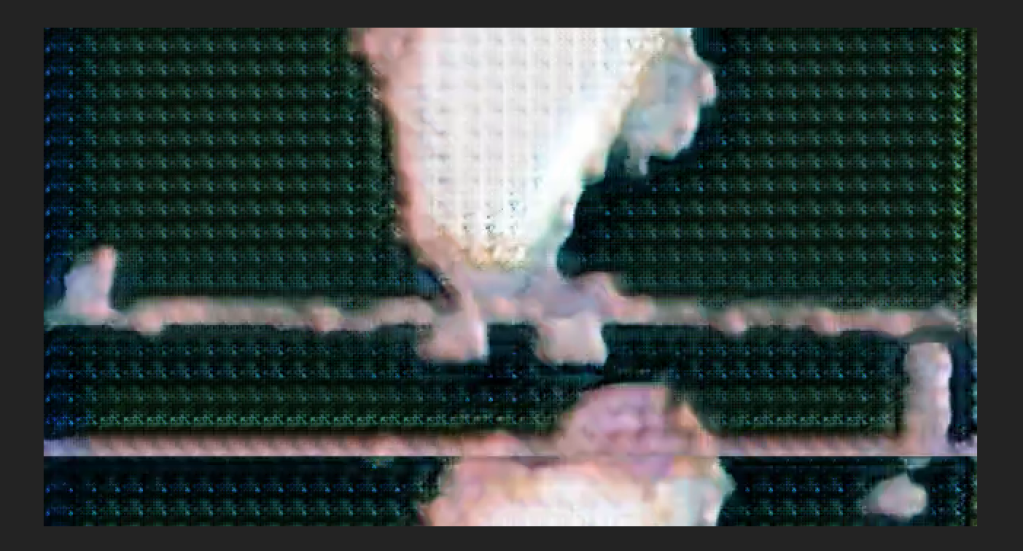

Luckily for me, there were new versions of the NN by now, and one caught my attention specifically. It gave the video a sort of retro green look with a bunch of dots neatly lined up. I put v1 through that once but the videos were still too similar.

At first I was messing around with the framerate and using incorrect Twixtor settings to have it melt a bit more. Eventually RSMB and Glow were added to the mix. Again, with the video getting fucked up, I mean enhanced, by the NN every now and then. Now, a very interesting part: the resolution of the video in AE was 640×342. This caused the NN to bug out a little bit, and the video was scrolling from the top to the bottom endlessly. It kind of looked like scan lines. Very cool.

After some fine tuning, it looked more and more like its own thing. MrNosec gave me the idea (and many more) to film it with my phone. I whipped out the Panasonic DMC-CM1 with its 1 inch sensor from 2014 (ahead of its time, but ackschully not a phone but a camera) and started filming my screen in the dark. Unintentionally, the perspective is a bit skewed and the camera was lagging at the start. The autofocus was also not doing its job at first. Filming it gave it a nice touch: it added some kind of uncertainty, it became more vague.

I attempted to confuse Warp Stabilizer. Unfortunately that didn’t work and Warp Stabilizer just didn’t do what I wanted. So I used S_Distort with more or less subtle settings, just so the straight lines got broken up a little bit.

Somewhere during that time I also changed the song from Merzbow to a Caretaker song into which I had inserted some sound effects a few years ago (whale noises, underwater noises such as thunder striking water, sonars and recordings from outer space, all that edgy stuff). Initially the song was planned for a different video which might or might not get finished. In any case it came in handy.

Still, the very bright parts were lacking in impact. In the AWA Discord, someone said how well the visuals of v1 fit the noise of Merzbow. That gave me the simple idea to have some noise audio get louder when the screen gets brighter. So I turned to Merzbow once more. As I’m lazy, I didn’t want to keyframe it by hand. Thankfully, Andü had the solution: an expression on an adjustment layer’s slider that writes the current screen brightness into that slider:

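    // Layer picked in the Layer Control effect; sample its average colour around the centre.
    sampleLayer = effect("Layer Control")("Layer");
    color = sampleLayer.sampleImage([sampleLayer.width/2, sampleLayer.height/2], [sampleLayer.width/2, sampleLayer.height/2]);
    // Perceived brightness of that colour; as the last statement it becomes the slider value.
    brightness = Math.sqrt(0.299*color[0]*color[0] + 0.587*color[1]*color[1] + 0.114*color[2]*color[2]);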
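To get a feeling for what the brightness line in the expression above produces, here is the same weighting applied to a few flat colours in plain JavaScript, outside of After Effects. The function name `perceivedBrightness` and the sample colours are my own additions. It assumes channel values between 0 and 1, which is the range `sampleImage()` returns.

```javascript
// Same weighted root-sum-of-squares as in the expression; r, g, b are in 0..1.
function perceivedBrightness([r, g, b]) {
  return Math.sqrt(0.299 * r * r + 0.587 * g * g + 0.114 * b * b);
}

// A few flat test colours (illustrative only, not taken from the project).
const samples = {
  black: [0, 0, 0],
  blue: [0, 0, 1],
  red: [1, 0, 0],
  green: [0, 1, 0],
  white: [1, 1, 1],
};

for (const [name, rgb] of Object.entries(samples)) {
  console.log(name, perceivedBrightness(rgb).toFixed(3));
}
```

Because green carries the largest weight, a pure green frame reads as brighter than a pure red one, and red as brighter than blue. That is handy to know for footage with a retro green look.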

I parented this to the audio levels of Merzbow – Hope and with some quick maths got it to the right volume. These noise shenanigans happened before I recorded the video with the Panasonic.
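I did not write down the quick maths, so this is only a sketch of what such a mapping could look like: a straight line from brightness to decibels. The function name `brightnessToAudioLevels` and the range from -40 dB to 0 dB are assumptions, not the values from the project. Audio Levels in AE takes a left and a right value, so the sketch returns a pair.

```javascript
// Hypothetical mapping: brightness 0..1 -> audio level in dB for both channels.
// quietDb and loudDb are made-up defaults; tune them by ear.
function brightnessToAudioLevels(brightness, quietDb = -40, loudDb = 0) {
  const b = Math.min(1, Math.max(0, brightness)); // clamp, in case the slider overshoots
  const db = quietDb + (loudDb - quietDb) * b;    // linear interpolation between the two levels
  return [db, db];                                // [left, right]
}

// Dark frame, mid-grey frame, full white frame.
for (const b of [0, 0.5, 1]) {
  console.log(b, brightnessToAudioLevels(b));
}
```

A linear ramp in dB is the laziest option. Since decibels are already logarithmic, it tends to sound more even than scaling the raw amplitude.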

As the final steps, I set two keyframes on Twixtor’s Speed attribute, 100% at the start and 200% at the end, to progressively increase the speed (with no motion vectors, so no actual motion interpolation) and parented noise (VFX) to the brightness slider. Additionally, I applied the Posterize Time effect on the main video layer and duplicated the layer with a different Posterize Time value and low opacity to create a ghosting effect. The noise being applied after filming the video with the camera gave it a certain edge, a contrast to the vagueness.
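For the speed ramp, a quick sketch of how much source footage a ramp from 100% to 200% eats up. It assumes the speed is interpolated linearly between the two keyframes and that the played source time is the accumulated speed over time. The function names and the 60 second duration are made up for the example.

```javascript
// Speed factor at output time t, ramping linearly from startSpeed to endSpeed.
function speedAt(t, duration, startSpeed = 1, endSpeed = 2) {
  return startSpeed + (endSpeed - startSpeed) * (t / duration);
}

// Source time reached at output time t: the integral of speedAt from 0 to t.
function sourceTimeAt(t, duration, startSpeed = 1, endSpeed = 2) {
  return startSpeed * t + ((endSpeed - startSpeed) * t * t) / (2 * duration);
}

const duration = 60; // seconds of output, an assumed value
for (const t of [0, 30, 60]) {
  console.log(t, speedAt(t, duration), sourceTimeAt(t, duration));
}
```

Under these assumptions the ramp averages out to 150%, so the source clip has to be one and a half times as long as the finished video.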

This project was meant to be just a little experiment with the neural network, but it turned into something quite interesting and fun. The act of altering footage outside of editing software has always been interesting to me. In 2019 I made an unpublished AMV in which I converted the video into an audio file, edited the audio in Audacity, then converted it back to a video. This caused some visual glitches that were cool, but the control I had over it was zero. Compared to that, what has been done in v1 and v2 allows much more freedom and many more choices.

I want to thank KoKuToru, BBFerdl, MrNosec and Andü.

I also want to thank everybody from the Anime Weekend Atlanta Accolades contest who commented on my video during the peer review. It gave me the motivation to create v2. It was also very interesting to read about some of the theories. While this started as just a test run for the neural network, I did end up spending a lot of thought and time on this project. I did not have a rock solid motive when I started; it grew together with the video. It’s a video where the path was the goal. At the end of the day, I wanted to push boundaries, in a sense. Sometimes I want to, quote: “piss people off while I sit in my basement and laugh”, sometimes I just want to make something visually interesting or put an idea into reality. And sometimes you just have to go with the flow, let things unfold.

This little rambling blog has become quite long already, so to sum it up: v1 is the older brother. He is edgy and agitated. v2 is the little brother, more timid and shy, but he can see specters.
